Machine Learning Attack Series: Image Scaling Attacks

This post is part of a series about machine learning and artificial intelligence. Click on the blog tag “huskyai” to see related posts.

- Overview: How Husky AI was built, threat modeled and operationalized

- Attacks: Some of the attacks I want to investigate, learn about, and try out

A few weeks ago, while preparing demos for my GrayHat 2020 - Red Team Village presentation, I ran across “Image Scaling Attacks” in the paper “Adversarial Preprocessing: Understanding and Preventing Image-Scaling Attacks in Machine Learning” by Erwin Quiring, et al.

I thought that was so cool!

What is an image scaling attack?

The basic idea is to hide a smaller image inside a larger image (the larger image should be about 5-10x the size of the hidden one). The attack is actually easy to explain:

- Attacker crafts a malicious input image by hiding the desired target image inside a benign image

- The image is loaded by the server

- Pre-processing resizes the image

- The server acts and makes a decision based on a different image than intended
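To make the steps above concrete, here is a minimal toy sketch in pure Python. It is not the code from the paper or from Husky AI. It assumes a deliberately simple scaler that keeps one pixel out of every `factor` x `factor` block (nearest-neighbor style), and the helper names `downscale_nearest` and `embed` are made up for illustration. Real attacks against OpenCV solve an optimization problem so the changes are less visible, but the underlying reason is the same: when downscaling by a large factor, the scaler only looks at a small fraction of the source pixels.

```python
# Toy grayscale images stored as lists of rows (values 0-255).
# Assumption: the "server" downsizes by keeping every `factor`-th pixel.

def downscale_nearest(image, factor):
    """Keep one pixel per factor x factor block and ignore all others."""
    return [row[::factor] for row in image[::factor]]

def embed(benign, target, factor):
    """Overwrite only the pixels the scaler will sample with the target image."""
    crafted = [row[:] for row in benign]  # copy so the benign image stays intact
    for y, target_row in enumerate(target):
        for x, value in enumerate(target_row):
            crafted[y * factor][x * factor] = value
    return crafted

factor = 8
benign = [[200] * 32 for _ in range(32)]  # 32x32 light gray "cover" image
target = [                                # 4x4 checkerboard to hide
    [0, 255, 0, 255],
    [255, 0, 255, 0],
    [0, 255, 0, 255],
    [255, 0, 255, 0],
]

crafted = embed(benign, target, factor)

# Count how many pixels the attacker had to touch.
changed = sum(
    c != b
    for crafted_row, benign_row in zip(crafted, benign)
    for c, b in zip(crafted_row, benign_row)
)
print(changed, "of", 32 * 32, "pixels changed")

# After pre-processing, the server sees the hidden image, not the cover image.
print(downscale_nearest(crafted, factor) == target)
```

Only a handful of pixels differ from the cover image, which is why the crafted image still looks benign at full size, yet the downscaled result is exactly the hidden image.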

My goal was to hide a husky image inside another image:

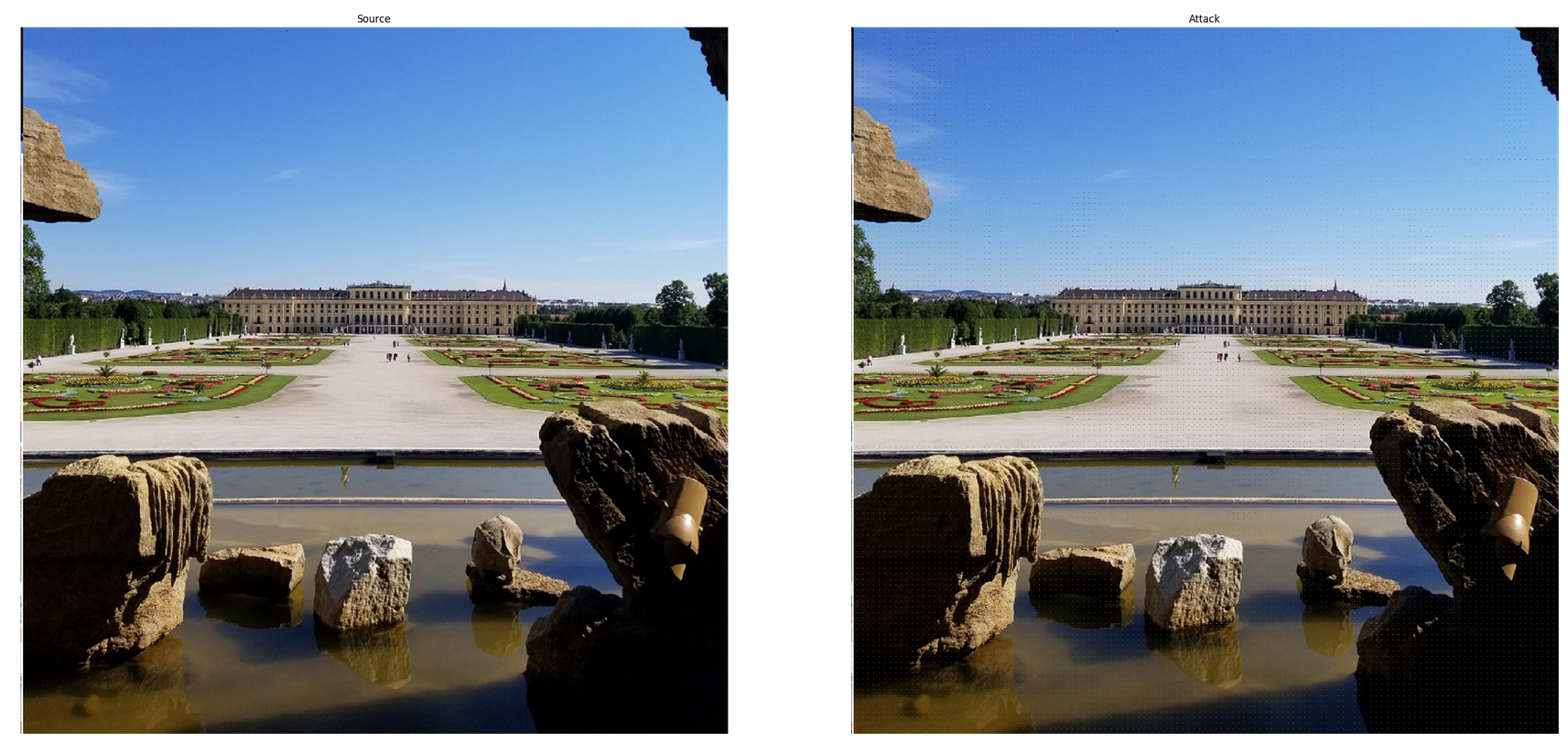

Here are the two images I used - before and after the modification:

If you look closely, you can see that the second image has some strange dots all over it. But this is not noticeable when the image is viewed at a smaller size.

You can find the code on GitHub. I used Google Colab to run it, and there were some errors initially, but it worked. Let me know if you are interested and I can clean up and share the notebook as well.

Rescaling and magic happens!

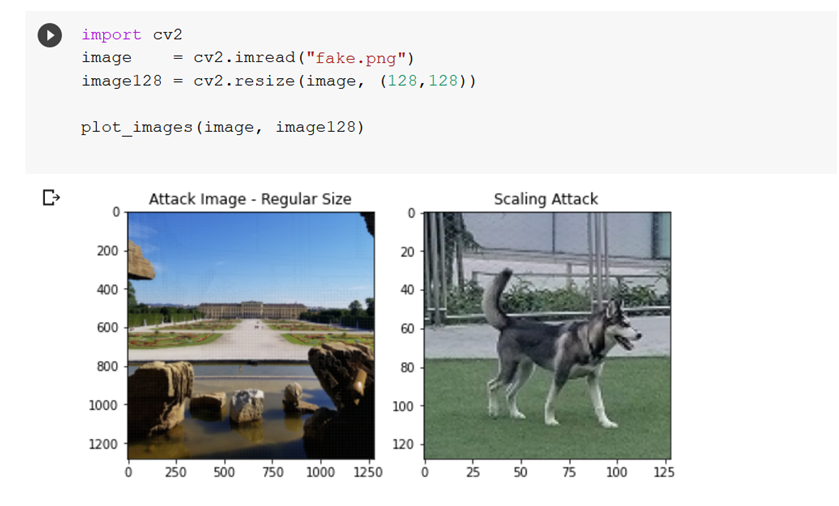

Now, look what happens when the image is loaded and resized with OpenCV using default settings:

On the left you can see the original sized image, and on the right the same image downsized to 128x128 pixels.

That’s amazing!

The downsized image is an entirely different picture now! Of course I picked a husky, since I wanted to attack “Husky AI” and find another bypass.

Implications

This has several implications:

- Training process: Crafted images can poison the training data (as pre-processing rescales images)

- Model queries: The model might predict on a different image than the one the user uploaded

- Non-ML attacks: This can also be an issue in other areas that have nothing to do with machine learning.

I guess security never gets boring; there is always something new to learn.

Mitigations

It turns out that Husky AI uses PIL, which was not vulnerable to this attack by default.

I got lucky, because initially Husky AI did use OpenCV and its default settings to resize images. But for some reason I changed that early on (not knowing it would also mitigate this attack).

If you use OpenCV, the issue can be fixed by passing the interpolation argument when calling the resize API to override the default behavior.
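A small sketch shows why the choice of interpolation matters. It is again a pure-Python toy, not OpenCV or PIL code, and the helpers `downscale_nearest` and `downscale_area` are hypothetical. The assumption is that the attacker only controls the few pixels a sampling-based scaler reads, while an area-averaging scaler lets every source pixel contribute. In OpenCV, area averaging can be selected with `interpolation=cv2.INTER_AREA` in `cv2.resize`; the post does not say which setting was used, so treat that as one possible option rather than the fix.

```python
def downscale_nearest(image, factor):
    """Sampling-based scaler: keeps one pixel per factor x factor block."""
    return [row[::factor] for row in image[::factor]]

def downscale_area(image, factor):
    """Area-averaging scaler: each output pixel is the mean of a whole block."""
    size = len(image) // factor
    return [
        [
            sum(
                image[y * factor + dy][x * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ) // (factor * factor)
            for x in range(size)
        ]
        for y in range(size)
    ]

factor = 8
# Crafted image: light gray cover with a hidden all-black 4x4 image
# written into exactly the pixels the sampling scaler reads.
crafted = [[200] * 32 for _ in range(32)]
for y in range(0, 32, factor):
    for x in range(0, 32, factor):
        crafted[y][x] = 0

nearest = downscale_nearest(crafted, factor)
area = downscale_area(crafted, factor)

print("sampling scaler sees:", nearest[0])   # the hidden black image
print("area scaler sees:    ", area[0])      # still close to the gray cover
```

With area averaging, the 16 manipulated pixels are diluted by the untouched pixels around them, so the downsized image stays close to what a human sees at full size.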

Hope that was useful and interesting.

Cheers, Johann.

Twitter: @wunderwuzzi23

If you are interested in red teaming at large, check out my book Red Team Strategies.

References

- Adversarial Preprocessing: Understanding and Preventing Image-Scaling Attacks in Machine Learning (https://www.usenix.org/system/files/sec20-quiring.pdf) (Erwin Quiring, TU Braunschweig)

- https://github.com/EQuiw/2019-scalingattack

- https://scaling-attacks.net